There Was an Error Loading Log Streams Please Try Again by Refreshing This Page Cloudwatch

The central challenge of modern visibility on clouds like AWS is that data originates from various sources across every layer of the application stack and varies in format, frequency, and importance. All of it needs to be monitored in real time by the appropriate roles in an organization. An AWS centralized logging solution therefore becomes essential as both production and the organization scale.

Attaining this kind of end-to-end visibility requires a conscious effort to centralize all the disparate monitoring data, regardless of where it originates. The focus of this guide is centralizing logs, events, and metrics for cloud-native applications running on Amazon Web Services (AWS).

AWS has by far the most comprehensive suite of cloud services, numbering 175 services as of 2020. Every AWS service churns out its own set of metrics, events, and logs. Additionally, the applications running in AWS produce their own performance metrics. AWS provides CloudWatch for centralizing this data. Because it is a native AWS service, hardly any setup is required, and CloudWatch automatically records some default monitoring information from many AWS services as soon as they are activated.

To get beyond the basic functionality offered by CloudWatch, AWS users resort to other methods to gain end-to-end visibility in AWS. One option is to push all monitoring data from CloudWatch to AWS Elasticsearch, which has a more capable data analytics engine. This is a step up from the default CloudWatch experience, but it still puts the burden of managing Elasticsearch on your shoulders.

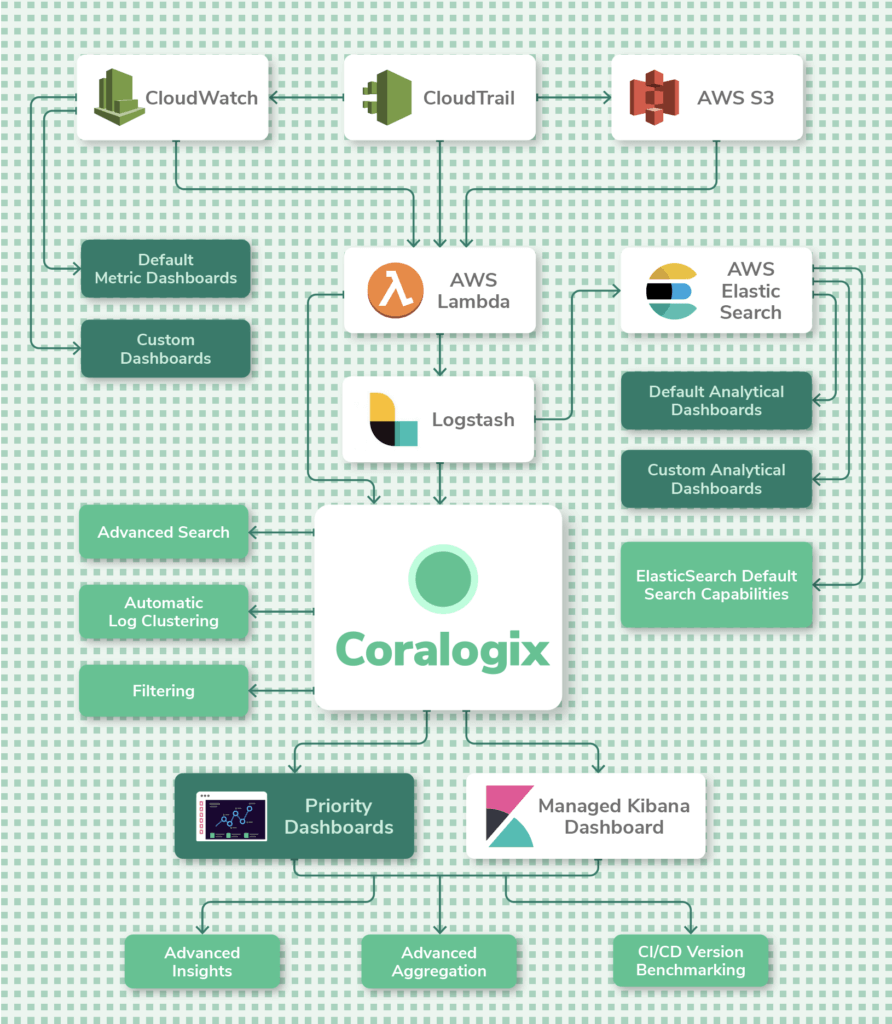

A better option is to use an AWS Partner Network offering like Coralogix. This takes the management load off your team while still giving them all the benefits of an end-to-end monitoring experience for AWS.

Part 1 – AWS Centralized Logging Basics

There are numerous types of logs in AWS, and the more applications and services you run in AWS, the more complex your logging needs are bound to be.

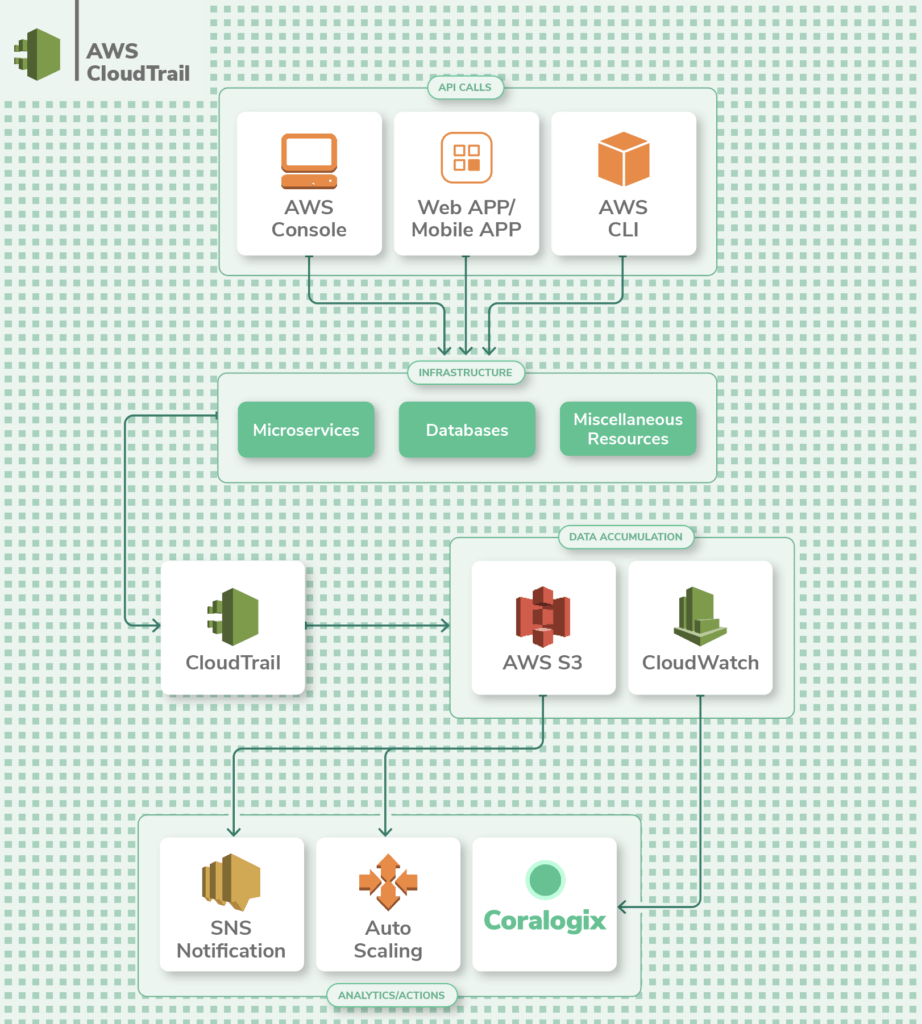

Logs originate from two principal sources – applications running on AWS services, and the AWS services themselves. CloudWatch is the primary collector of logs and metrics about application performance and service utilization. Separately, AWS stores all API calls made to AWS services in CloudTrail.

Once collected in CloudWatch and CloudTrail, the data is ready for analysis and alerting. From there, it can be archived in AWS S3 or sent to a separate logging and monitoring solution.

AWS CloudWatch Overview

CloudWatch offers basic log management capabilities such as alerting, actions, log analysis, and querying. It can also generate custom metrics from log data you specify. CloudWatch has grown into an important component of the AWS ecosystem and covers many basic log and metric management needs.

AWS CloudTrail Overview

To gain a holistic view of your AWS applications and resources, you'll need API-level logs as well as application and service-level logs. AWS CloudTrail handles these.

CloudTrail tracks actions taken by a user, role, or AWS service, whether they are taken through the AWS console or through API operations. On-premises infrastructure contrasts sharply with this. There, something as important as network flow monitoring (NetFlow logs) could take weeks or months to get off the ground. In AWS, you can track flow logs with a few clicks at a relatively low price.

Some basic tracking is enabled by default in AWS CloudWatch and CloudTrail. However, you should review the configuration and use this guide to apply the most important best practices.

Most services publish CloudTrail events, but CloudTrail only keeps events from the past 90 days. To save data long-term, you'll need to create a trail and enable continuous delivery to an S3 bucket. By default, a new trail captures data from all regions.
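
Once a trail delivers to S3, each object is a gzip-compressed JSON file with a top-level `Records` array. Each record carries fields such as `eventSource`, `eventName`, `eventTime`, and `awsRegion`. The sketch below uses only the Python standard library to tally one file's events by source and name. It assumes the file has already been downloaded (the download step, for example with boto3, is omitted), and the sample records are made up.

```python
import gzip
import json
from collections import Counter


def count_cloudtrail_events(gz_bytes):
    """Count (eventSource, eventName) pairs in one gzipped CloudTrail log file."""
    document = json.loads(gzip.decompress(gz_bytes))
    counts = Counter()
    for record in document.get("Records", []):
        counts[(record.get("eventSource"), record.get("eventName"))] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical file contents built in memory instead of fetched from S3.
    sample = {
        "Records": [
            {"eventSource": "ec2.amazonaws.com", "eventName": "RunInstances"},
            {"eventSource": "ec2.amazonaws.com", "eventName": "RunInstances"},
            {"eventSource": "s3.amazonaws.com", "eventName": "PutObject"},
        ]
    }
    blob = gzip.compress(json.dumps(sample).encode("utf-8"))
    for (source, name), n in count_cloudtrail_events(blob).most_common():
        print(source, name, n)
```

A tally like this is a quick way to see which services and API calls dominate a trail before deciding what to alert on.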

Metrics, Events, and Logs

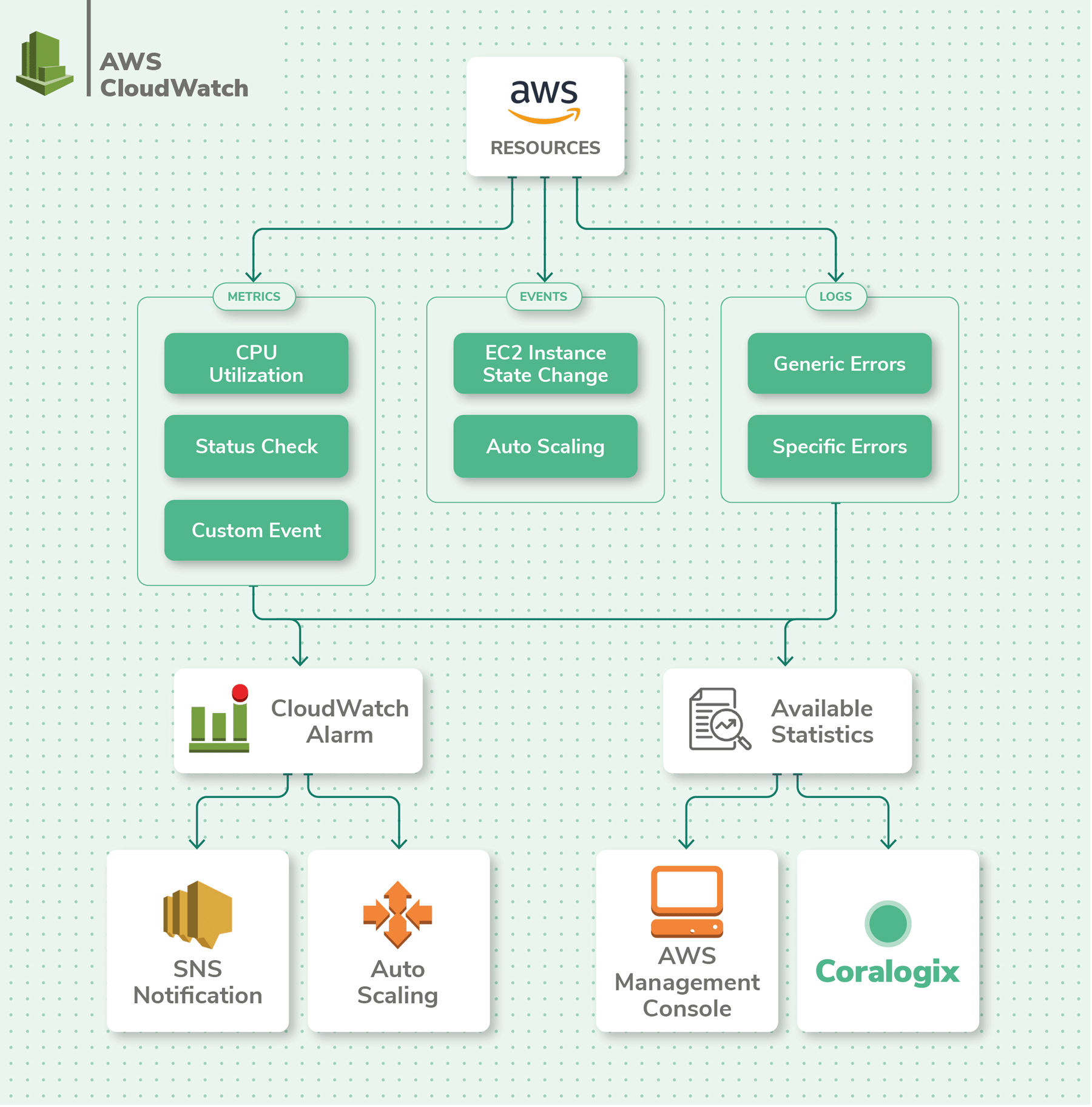

Monitoring with AWS CloudWatch can be confusing if you're not familiar with the different types of data available to you. There are three types of monitoring data in CloudWatch: metrics, events, and logs.

Logs

Logs can hold more than one piece of information, whereas each metric focuses on the single data point it measures. Events are generated whenever something changes in an AWS service. CloudWatch Subscriptions let other AWS services, such as Kinesis and Lambda, listen to a real-time feed of CloudWatch Logs. Those services can process the logs further or send them on to another service like Coralogix. You can also use subscription filters to define which logs get sent to specific AWS resources.
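
Here is a sketch of the subscription mechanism, assuming a Lambda function is the subscription destination. CloudWatch Logs delivers log events to the function base64-encoded and gzip-compressed under `event["awslogs"]["data"]`. The decoded JSON contains `messageType`, `logGroup`, `logStream`, and a `logEvents` list. The handler below decodes that payload with the standard library. It simply returns the messages, which stands in for forwarding them to another service.

```python
import base64
import gzip
import json


def decode_subscription_event(event):
    """Decode the base64 + gzip payload a CloudWatch Logs subscription sends to Lambda."""
    raw = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(raw))


def handler(event, context):
    payload = decode_subscription_event(event)
    # CONTROL_MESSAGE payloads only check that the destination is reachable
    # and carry no real log events, so skip them.
    if payload.get("messageType") != "DATA_MESSAGE":
        return []
    messages = [e["message"] for e in payload.get("logEvents", [])]
    # A real forwarder would ship `messages` to another service here.
    return messages
```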

Metrics

Metrics are numeric data points that report on one specific aspect of the system's performance. By default, metrics are recorded at one-minute intervals; this interval is called the resolution. A finer resolution of 1-second intervals is available in CloudWatch through paid custom metrics.

Events

Events are generated in near real time when a change is made to an AWS service, and they are saved as JSON objects. For example, an event is generated when an EC2 instance is created or when an AWS API is accessed. CloudWatch records these events, and you can act on them. For instance, you could set up an event rule that notifies an AWS SNS topic whenever an EC2 instance is terminated.
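
For the EC2-termination example, the rule's event pattern is a small JSON document. The sketch below defines that pattern. To show how matching works, it also includes a deliberately simplified matcher. Under this matcher, every pattern key must exist in the event, list values mean "one of", and nested objects are matched recursively. Real CloudWatch Events/EventBridge patterns support more operators (prefix, anything-but, numeric ranges, and so on) that this sketch does not handle. The instance ID in the sample event is made up.

```python
import json

# Pattern for "an EC2 instance entered the terminated state".
EC2_TERMINATED_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["terminated"]},
}


def matches(pattern, event):
    """Simplified pattern check: list = "value is one of", dict = recurse."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


sample_event = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "terminated"},
}

if __name__ == "__main__":
    print(json.dumps(EC2_TERMINATED_PATTERN))
    print(matches(EC2_TERMINATED_PATTERN, sample_event))
```

You would supply the printed pattern when creating the rule (for example with `aws events put-rule --event-pattern`). The SNS topic is then attached as the rule's target (for example with `aws events put-targets`).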

Popular AWS Services

Here is a list of the most vital AWS services and what you should consider when setting up logging for them with CloudWatch and CloudTrail. Below, you'll also find a table with a more complete list of AWS services.

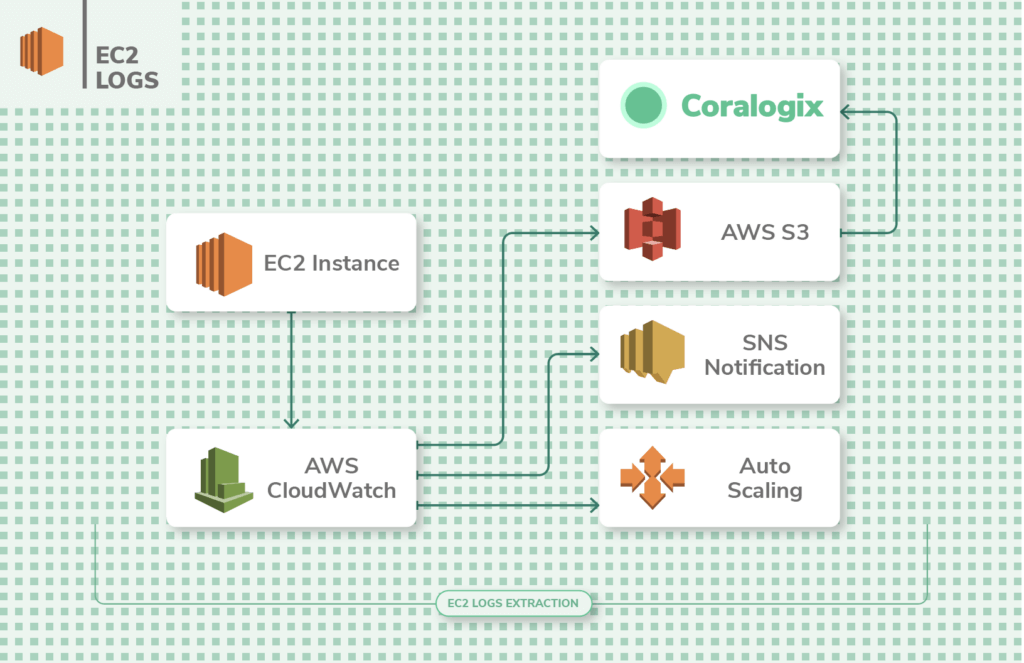

EC2 Logs

AWS EC2 is the most popular and widely used AWS service. It offers cloud-based compute instances for running applications. The instances come in Linux and Windows flavors and in various compute capacities.

To start collecting logs from EC2, you first need to configure the appropriate IAM policies and roles. Then, install the CloudWatch agent using a single-line command from the AWS CLI. Once the agent is configured, logs stream from the EC2 instances to CloudWatch for analysis.
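
As a rough illustration of the configuration step, the unified CloudWatch agent reads a JSON file. In that file, the `logs.logs_collected.files.collect_list` entries map local files to log groups. The sketch below generates a minimal version of the file. The file path and log group name are hypothetical, and a real configuration can also include a `metrics` section, which is left out here.

```python
import json


def build_agent_config(files):
    """Build a minimal CloudWatch agent config that ships log files.

    `files` maps a local file path to the log group it should go to.
    "{instance_id}" is a placeholder that the agent expands to the EC2 instance ID.
    """
    collect_list = [
        {
            "file_path": path,
            "log_group_name": group,
            "log_stream_name": "{instance_id}",
        }
        for path, group in files.items()
    ]
    return {"logs": {"logs_collected": {"files": {"collect_list": collect_list}}}}


if __name__ == "__main__":
    # Hypothetical mapping: system log -> a log group named /ec2/messages.
    config = build_agent_config({"/var/log/messages": "/ec2/messages"})
    print(json.dumps(config, indent=2))
```

The resulting JSON is typically loaded with the agent's control script (`amazon-cloudwatch-agent-ctl -a fetch-config`). Check the agent documentation for the exact flags on your platform.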

You'll want to analyze EC2 error logs when troubleshooting issues with instances. The logs are sent to CloudWatch for analysis and can be stored in S3 buckets for archiving. The key metrics logged from EC2 instances are disk I/O, network I/O, and CPU utilization.

How to: Integrate ECS / EC2 Logs with Coralogix

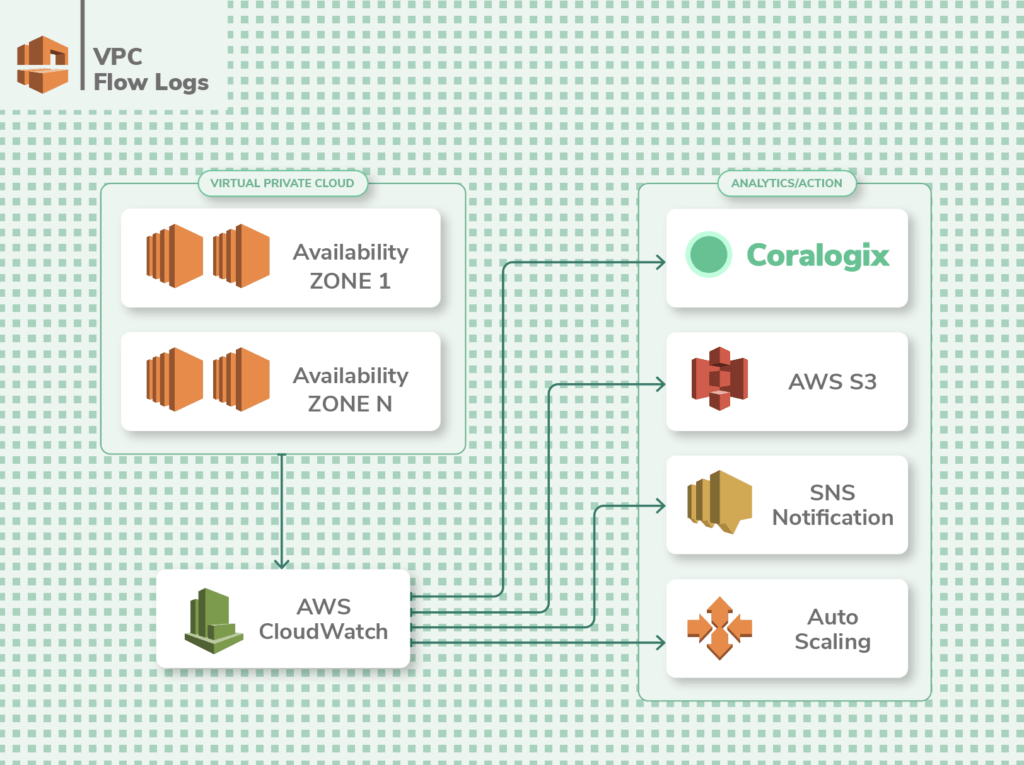

VPC Flow Logs

If AWS's default shared EC2 instances aren't adequate for your application's security and performance needs, AWS VPC gives you a private EC2 experience in which you control the network. This essentially provides your own private space in the AWS cloud where only your apps run. You still don't have to maintain the underlying EC2 instances, because AWS handles that maintenance for you. Once the VPC is set up, it is essential to monitor the traffic flowing to and from its network interfaces (ingress and egress).

VPC Flow Logs contain information about the traffic passing through your application at any given time. They list the requests that were allowed or denied according to your ACL (access control list) rules. For each request, they also record the IP addresses and ports, the number of packets and bytes sent, and timestamps.

This information gives you deep visibility into your VPC-based applications. Using these logs, you can optimize your existing ACL rules and make exceptions that allow or deny certain types of requests.
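
To make these fields concrete, here is a minimal parser for the default (version 2) flow log record format, which is a space-separated line of 14 fields. It counts REJECT records per source address, one simple signal for tuning ACL rules or spotting suspicious hosts. The sample records are illustrative. Custom flow log formats with different fields would need a different field list.

```python
from collections import Counter

# Field order of the default (version 2) VPC Flow Logs record format.
FIELDS = [
    "version", "account_id", "interface_id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log_status",
]


def parse_flow_record(line):
    values = line.split()
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    return dict(zip(FIELDS, values))


def rejected_by_source(lines):
    """Count REJECTed records per source IP address."""
    counts = Counter()
    for line in lines:
        record = parse_flow_record(line)
        if record["action"] == "REJECT":
            counts[record["srcaddr"]] += 1
    return counts


if __name__ == "__main__":
    sample = [
        "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
        "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK",
        "2 123456789010 eni-1235b8ca123456789 198.51.100.7 172.31.16.21 "
        "40000 3389 6 1 40 1418530010 1418530070 REJECT OK",
    ]
    print(rejected_by_source(sample).most_common())
```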

You can also set alarms to be notified of suspicious requests. However, you can't tag Flow Logs. Also, once a Flow Log is created, you can't change its configuration; you'll need to delete it and create a new one. Finally, publishing and storing Flow Logs in CloudWatch Logs or S3 incurs additional cost.

How to: Integrate VPC flow logs with Coralogix

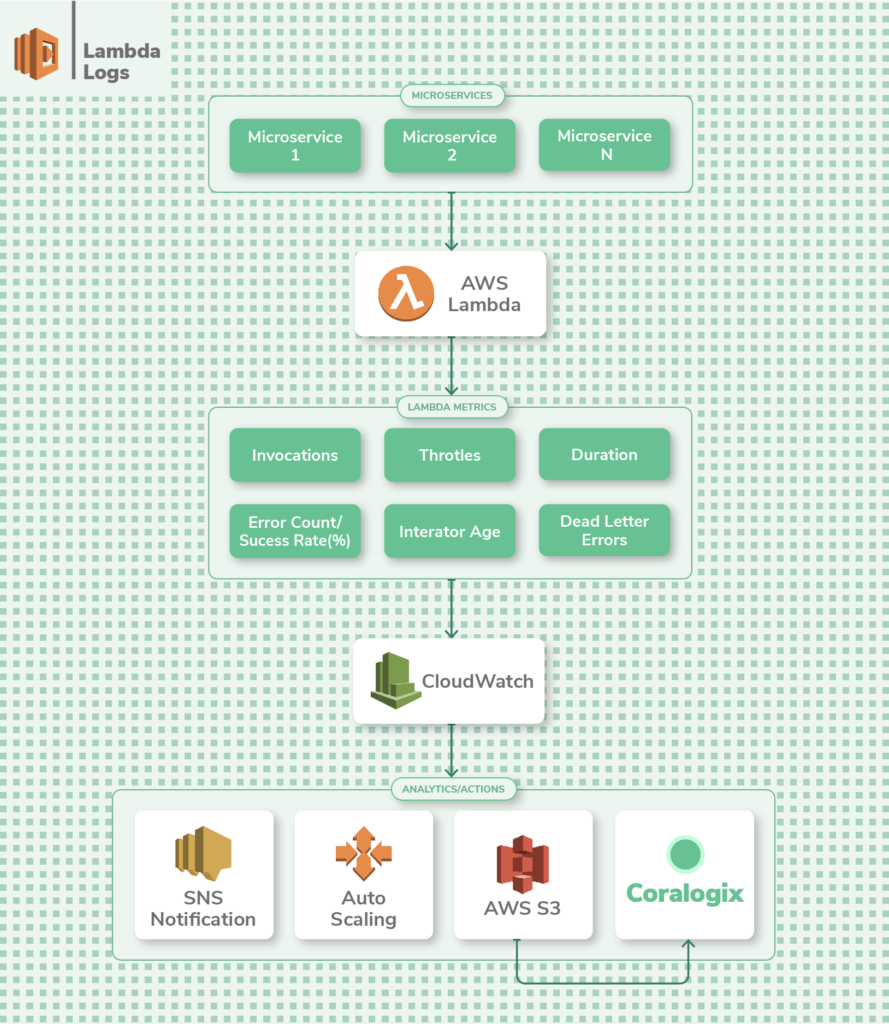

Lambda Logs

Lambda is the serverless computing solution from AWS that lets you run applications without creating or maintaining any underlying instances. It excels at short-lived jobs that need compute capacity in short bursts.

The CloudWatch dashboard provides vital logs and metrics such as the number of invocations of a function, invocation duration, errors, and throttles. This is great for gaining an overview of your application's health.

To drill deeper into the performance of your Lambda functions, you'll need to add logging statements to the code of each function. Remember to assign an appropriate execution role so the Lambda function has permission to publish logs to CloudWatch.
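
Here is a minimal sketch of such logging statements in a Python function, assuming the Lambda Python runtime. The runtime captures output from the standard `logging` module (and from `print`) and writes it to the function's log group. This works provided the execution role grants `logs:CreateLogGroup`, `logs:CreateLogStream`, and `logs:PutLogEvents`. Emitting one JSON object per line is a design choice, not a requirement, but it lets CloudWatch Logs Insights discover the fields automatically. The `items` payload field is hypothetical.

```python
import json
import logging
import time

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def handler(event, context):
    """Hypothetical handler that emits one structured JSON log line per call."""
    started = time.perf_counter()
    # context is None when the function is called locally.
    request_id = getattr(context, "aws_request_id", "local")
    # ... the function's real work would go here ...
    result = {"status": "ok", "items": len(event.get("items", []))}
    logger.info(json.dumps({
        "request_id": request_id,
        "items": result["items"],
        "elapsed_ms": round((time.perf_counter() - started) * 1000, 3),
    }))
    return result
```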

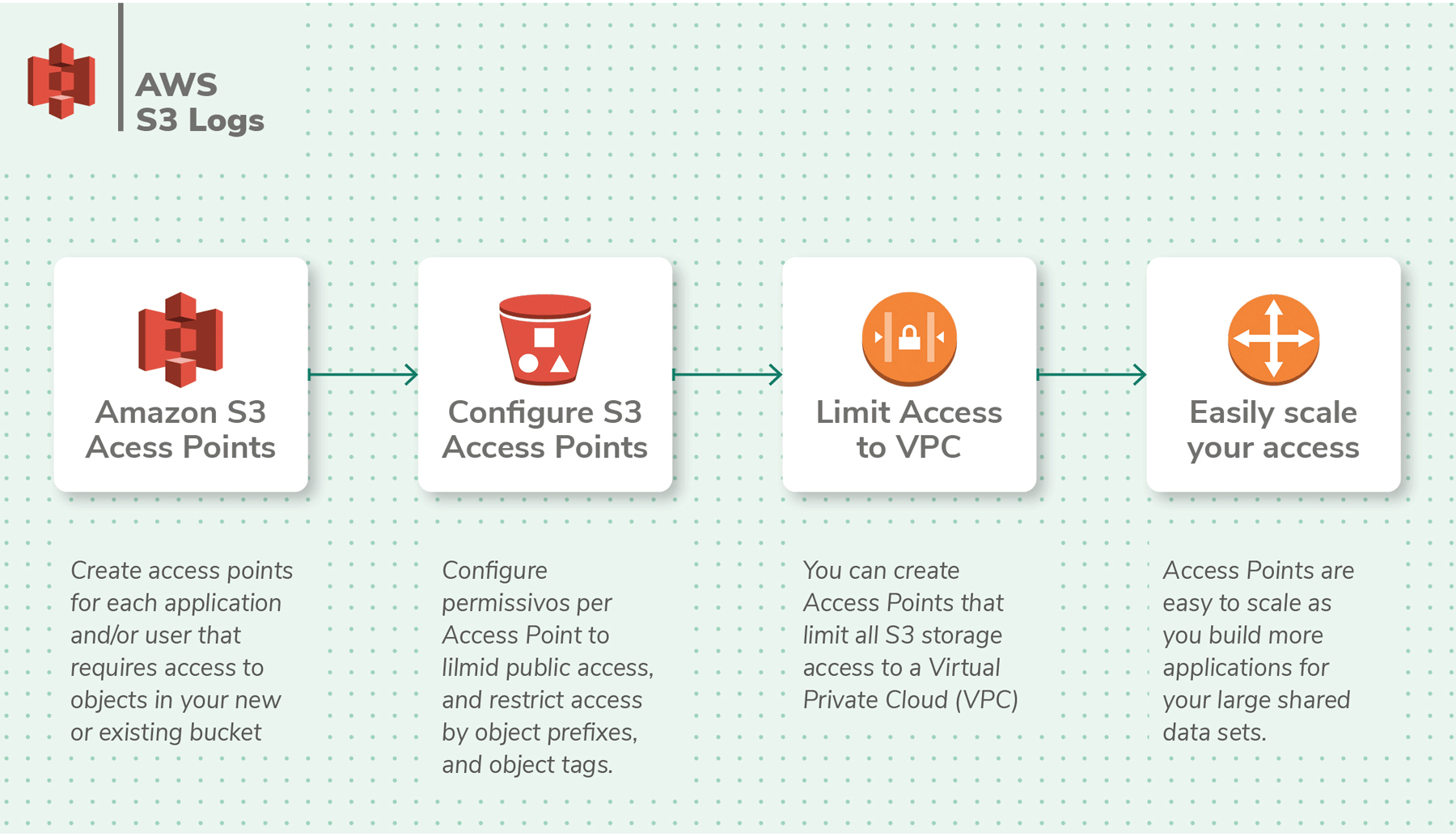

S3 Logs

AWS S3 is the first service AWS launched, and it plays a vital role in storing data, including logs, from various other AWS services. You need visibility into S3's own performance, but arguably the most important type of S3 log is the server access log.

Server access logs provide visibility into each call made to an S3 bucket from other AWS services or applications. They include details like the source of the request, the name of the S3 bucket, the request time, errors, and response codes. Server access logs are disabled by default and must be enabled.
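
Server access log records are space-separated. The timestamp is wrapped in brackets, and the request line, referrer, and user agent are wrapped in quotes, so a plain `split()` is not enough. The sketch below tokenizes a record with a regex and maps the leading fields, in their documented order, to names. Later fields, such as version ID, host ID, and TLS details, are ignored. The sample line used for testing is made up.

```python
import re

# A token is a [bracketed] timestamp, a "quoted" string, or a run of
# non-space characters.
TOKEN = re.compile(r'\[[^\]]*\]|"[^"]*"|\S+')

# Leading fields of an S3 server access log record, in documented order.
FIELDS = [
    "bucket_owner", "bucket", "time", "remote_ip", "requester",
    "request_id", "operation", "key", "request_uri", "http_status",
    "error_code", "bytes_sent", "object_size", "total_time",
    "turn_around_time", "referer", "user_agent",
]


def parse_access_log(line):
    record = {}
    for name, token in zip(FIELDS, TOKEN.findall(line)):
        # Drop surrounding brackets or quotes; "-" means "no value".
        value = token[1:-1] if token[0] in '["' else token
        record[name] = None if value == "-" else value
    return record
```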

Going deeper, CloudTrail can track object-level logs. These record API calls to S3 and the changes those calls make to the objects stored in S3. As with server access logs, object-level logging has to be set up manually.

The easiest way to get started with S3 logs is to use the S3 management console to set a destination bucket for the logs.

How to: Integrate S3 logs with Coralogix

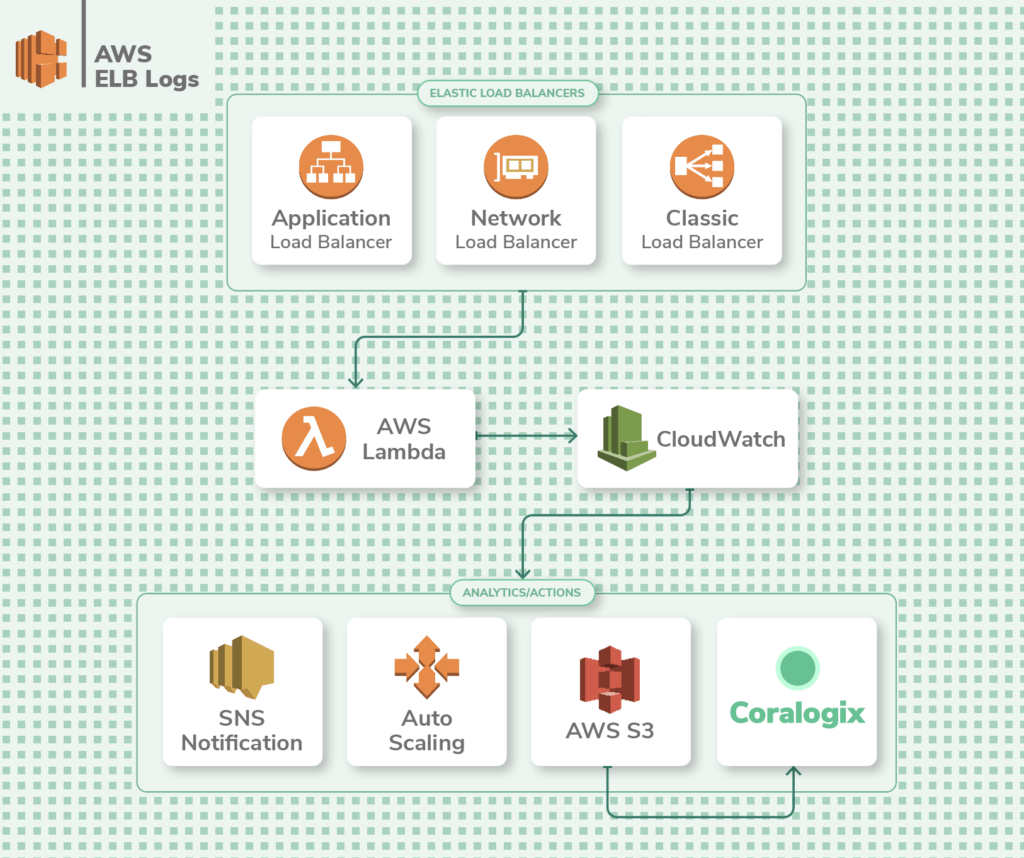

ELB Logs

AWS Elastic Load Balancer (ELB), as the name suggests, is a load balancer service that routes traffic across various AWS services. It is used to handle spikes in traffic.

Because ELB manages network traffic, monitoring it is vital for insight into application performance. ELB generates access logs that record details such as the source of each request, latency, timestamps, and errors. Access logs can be stored in an S3 bucket. They're not enabled by default and need to be configured. The only additional cost is S3 storage fees.
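
As an illustration of what an access log entry contains, here is a sketch that parses one entry in the Classic Load Balancer format and sums its three processing-time fields into an end-to-end latency. Application Load Balancer logs use a different, longer format that starts with a type field, so this field list does not apply to them. The sample entry used for testing follows the documented Classic format.

```python
import re

# Quoted strings ("GET / HTTP/1.1") or runs of non-space characters.
TOKEN = re.compile(r'"[^"]*"|\S+')

# Field order of a Classic Load Balancer access log entry.
FIELDS = [
    "timestamp", "elb", "client_port", "backend_port",
    "request_processing_time", "backend_processing_time",
    "response_processing_time", "elb_status_code", "backend_status_code",
    "received_bytes", "sent_bytes", "request", "user_agent",
    "ssl_cipher", "ssl_protocol",
]


def parse_elb_entry(line):
    tokens = [t[1:-1] if t.startswith('"') else t for t in TOKEN.findall(line)]
    return dict(zip(FIELDS, tokens))


def total_latency(entry):
    """Sum the three processing-time fields, in seconds.

    The load balancer writes -1 in these fields when, for example, it could
    not dispatch the request to a registered instance; return None then.
    """
    parts = [float(entry[k]) for k in (
        "request_processing_time",
        "backend_processing_time",
        "response_processing_time",
    )]
    return None if any(p < 0 for p in parts) else sum(parts)
```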

How to: Integrate ELB logs with Coralogix

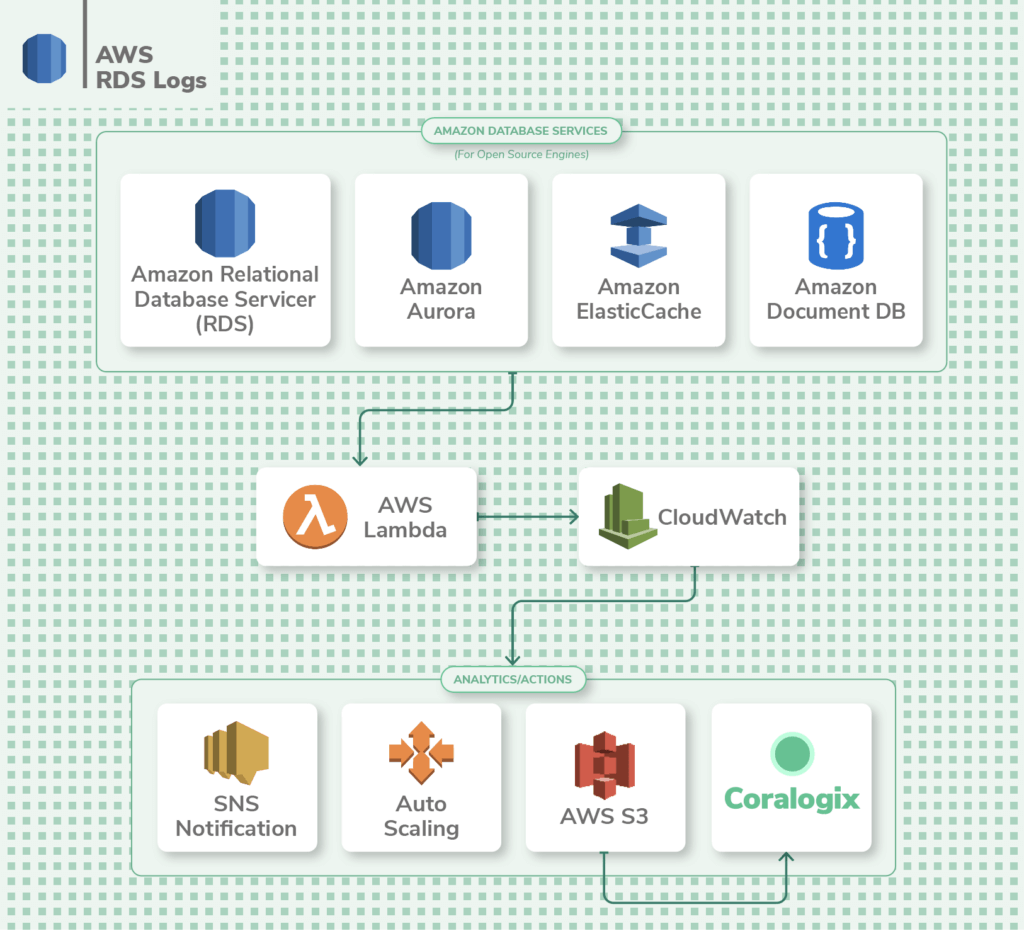

RDS Logs

AWS Relational Database Service (RDS) is a managed database service that makes it easy to scale and operate relational databases. You can run a variety of database engines on RDS, such as MariaDB, Microsoft SQL Server, MySQL, Oracle Database, and PostgreSQL.

You can view database logs from within the RDS console or publish them to CloudWatch for further analysis. RDS generates error logs by default. For optimization and troubleshooting, you'll need to configure additional logs such as slow query, audit, and general logs.

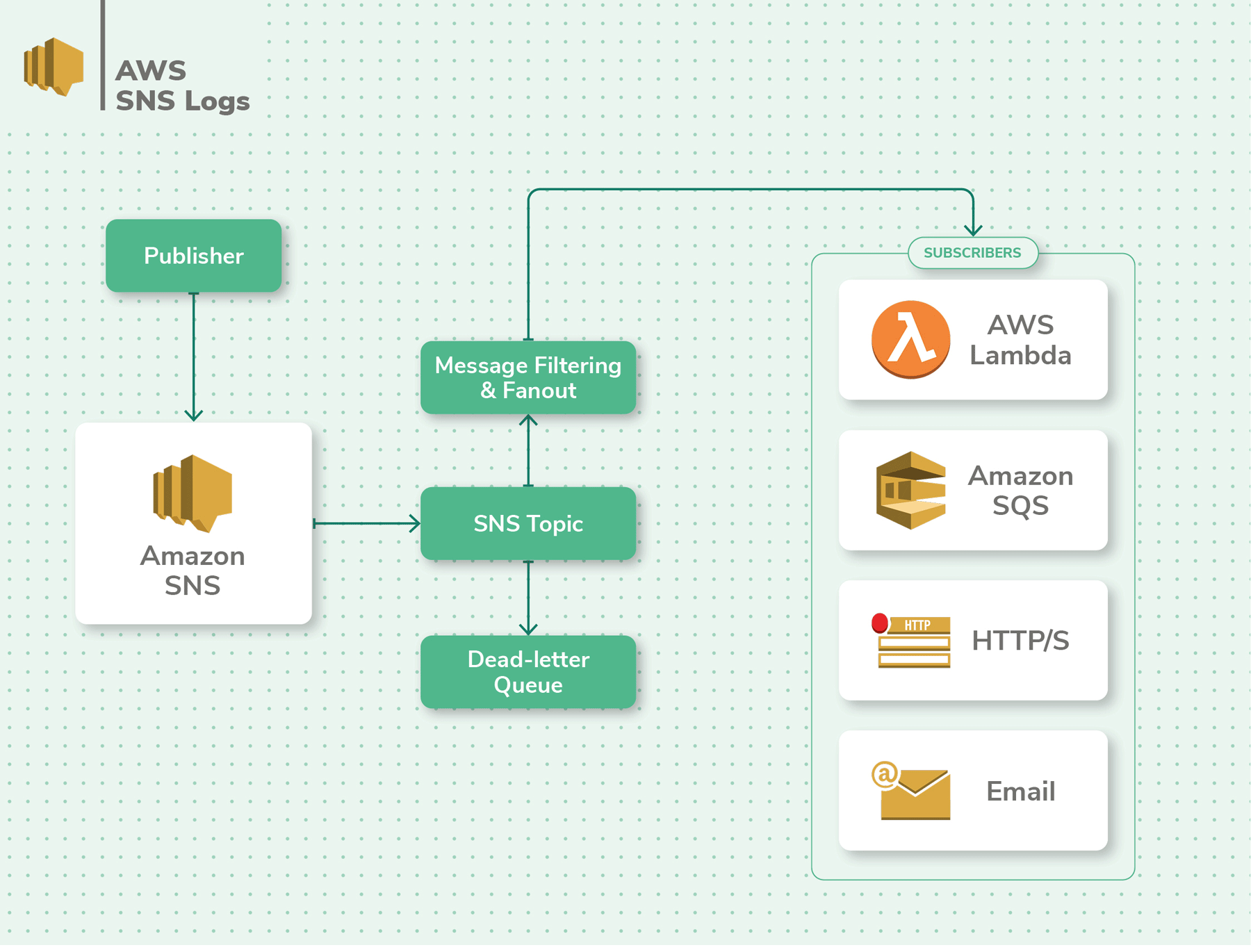

SNS Logs

AWS SNS is a pub/sub messaging service that sends messages to other AWS services, or even to end users via SMS or email. Services like Lambda can use these messages as triggers to begin parallel execution of a job. SNS makes it easier to manage internal communication between distributed microservice applications.

When monitoring SNS topics, you'll want to track several things. These include message volume, failed notifications and the reasons for their failure, messages that are filtered out, and the volume of SMS messages sent. All of these metrics are conveniently available in CloudWatch. Additionally, CloudTrail stores data about the API calls made to SNS. In CloudTrail, you'll need to configure a trail that tracks all SNS events from all regions in an S3 bucket.

Part 2 – AWS logging workflow

Once you've decided which services you'd like to monitor using CloudWatch and CloudTrail, the next step is to analyze these logs to glean meaningful and actionable insights.

Collecting

As with any logging practice, the first step in AWS logging is to collect logs from various sources. For this, AWS provides a unified logging agent that collects both logs and advanced metrics. There are many ways to install the agent on your EC2 instances and other AWS services, depending on where your instances are running. You'll also need to configure the agent's configuration file, which specifies the log group, log stream, time zone, and more. For the older CloudWatch Logs agent, this file is awslogs.conf. Some AWS services can send logs directly to S3, but CloudWatch "Deliver Logs" costs would still apply.
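
The awslogs.conf file belongs to the older CloudWatch Logs agent; the unified agent uses a JSON configuration instead. Assuming you are on the older agent, the sketch below renders a minimal awslogs.conf with Python's `configparser`. The file has a `[general]` section pointing at the agent's state file. It also has one section per log file, naming that file's log group, stream, timestamp format, and time zone. The paths and group names are placeholders.

```python
import configparser
import io


def build_awslogs_conf(log_files, state_file="/var/lib/awslogs/agent-state"):
    """Render an awslogs.conf for the older CloudWatch Logs agent.

    `log_files` maps a local file path to a log group name. Each file gets
    its own section; the agent expands {instance_id} itself.
    """
    # interpolation=None so "%b %d %H:%M:%S" is written literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser["general"] = {"state_file": state_file}
    for path, group in log_files.items():
        parser[path] = {
            "file": path,
            "log_group_name": group,
            "log_stream_name": "{instance_id}",
            "datetime_format": "%b %d %H:%M:%S",
            "time_zone": "UTC",
            "initial_position": "start_of_file",
        }
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(build_awslogs_conf({"/var/log/messages": "/var/log/messages"}))
```

Setting `interpolation=None` stops `configparser` from treating the `%` signs in `datetime_format` as interpolation syntax.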

Parsing

Parsing unstructured logs is critical for extracting the full value of the data and making it ready for analysis. Parsing lets us compute statistics on log message parameter values, conduct faceted searches, and filter logs by specific fields and values.

In CloudWatch, parsing is supported only at query time; the underlying log data cannot be changed. For full parsing and enrichment capabilities, you have two choices. You can use a third-party tool like Coralogix. Alternatively, you can forward the logs to Logstash (with the CloudWatch input plugin), parse them with Grok, and then feed them into AWS Elasticsearch.
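
Grok patterns are essentially named regular expressions. As a standard-library stand-in for that idea (not Logstash itself), the sketch below uses a Python regex with named groups to split a hypothetical `timestamp level service message` line into fields. It then counts lines per level, the kind of faceted statistic that parsing enables. CloudWatch Logs Insights offers comparable query-time extraction through its `parse` command, without changing the stored data.

```python
import re
from collections import Counter

# Plays the role of a Grok pattern such as
# %{TIMESTAMP_ISO8601:ts} %{LOGLEVEL:level} %{WORD:service} %{GREEDYDATA:msg}
# for a hypothetical log layout.
LINE = re.compile(
    r"(?P<ts>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(?:\.\d+)?Z?)\s+"
    r"(?P<level>[A-Z]+)\s+"
    r"(?P<service>[\w-]+)\s+"
    r"(?P<msg>.*)"
)


def parse_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LINE.match(line)
    return match.groupdict() if match else None


def level_counts(lines):
    """Facet: number of parsed lines per log level (unparsed lines skipped)."""
    return Counter(r["level"] for r in map(parse_line, lines) if r)
```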

Querying

Querying is likely the most common operational task performed on log data. The right search capabilities let you analyze logs and find insights more easily.

For AWS logs, we have several options to query the data:

CloudWatch: Includes aggregations, filters, regular expressions, and automatically discovered JSON fields

Awslogs: A simple command-line tool for querying groups, streams, and events from Amazon CloudWatch Logs. It lets you query across log groups and streams with log aggregation and offers conveniences like human-friendly time filtering.

AWS Elasticsearch: AWS' hosted Elasticsearch service

Coralogix: A fully managed Elasticsearch service with advanced search, filtering, and automatic log clustering capabilities.

Monitoring

Dashboards help you track the most important metrics so you're always aware of the state of the system. AWS CloudWatch comes with multiple visualization options. You can build dashboards that monitor metrics derived from your logs: create a log query in CloudWatch and add it to a dashboard. For example, you can calculate statistics like percentiles and aggregations. You can then visualize the data as a line chart, a stacked chart, or a numerical metric. Taking things further, you can add alarms to widgets for quick and simple monitoring.

For monitoring an entire infrastructure, Coralogix provides fully managed Kibana access as well as proprietary dashboards that provide advanced insights.

Setting up CloudWatch alarms

When things go wrong, alerts are essential for reducing response and recovery times. CloudWatch lets you set alarms on any widget in a dashboard. You can configure CloudWatch Logs to trigger an alarm whenever a metric reaches a defined threshold.

Alarms have configurable resolutions of between 1 and 60 seconds, so you can decide how often to run the query for each metric. When an alarm triggers, CloudWatch can also start automated actions across AWS services, which lets you build sophisticated workflows.
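
Under the hood, a CloudWatch alarm compares each period's aggregated value against a threshold. It changes state when enough of the most recent periods breach that threshold, the "M out of N" model set by `EvaluationPeriods` and `DatapointsToAlarm`. The function below is a simplified local model of that rule, useful for reasoning about thresholds before you create an alarm. It ignores missing-data treatment and other details of real evaluation, so treat it as an approximation rather than CloudWatch's actual algorithm.

```python
import operator

# Comparison operator names as used by the CloudWatch alarm API.
COMPARATORS = {
    "GreaterThanThreshold": operator.gt,
    "GreaterThanOrEqualToThreshold": operator.ge,
    "LessThanThreshold": operator.lt,
    "LessThanOrEqualToThreshold": operator.le,
}


def alarm_state(datapoints, threshold, comparison="GreaterThanThreshold",
                evaluation_periods=3, datapoints_to_alarm=None):
    """Simplified "M out of N" evaluation.

    `datapoints` holds one aggregated value per period, oldest first. The
    result is ALARM when at least M (datapoints_to_alarm) of the last N
    (evaluation_periods) values breach the threshold.
    """
    m = datapoints_to_alarm or evaluation_periods
    window = datapoints[-evaluation_periods:]
    if len(window) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaches = sum(COMPARATORS[comparison](v, threshold) for v in window)
    return "ALARM" if breaches >= m else "OK"
```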

Apart from CloudWatch alarms, you can also set up Lambda functions within AWS to trigger alerts. With scheduled event triggers in AWS Lambda, you can run a query and publish the results to an Amazon Simple Notification Service (SNS) topic. The topic can then send an email or start an automated action.
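
Here is a minimal sketch of that pattern using boto3. It assumes the function is invoked on a schedule, for example by a CloudWatch Events/EventBridge schedule rule configured separately. The function starts a CloudWatch Logs Insights query, polls until the query finishes, and publishes any result rows to an SNS topic. The clients are passed in so the logic can be exercised with stubs. The environment variable names `LOG_GROUP`, `QUERY`, and `TOPIC_ARN` are hypothetical.

```python
import os
import time

TERMINAL = ("Complete", "Failed", "Cancelled", "Timeout")


def run_query_and_notify(logs, sns, log_group, query, topic_arn,
                         lookback_seconds=3600, poll_seconds=1.0,
                         timeout_seconds=60):
    """Run a Logs Insights query and publish its rows to SNS.

    `logs` and `sns` are boto3 clients (or stubs with the same methods).
    Returns the number of result rows.
    """
    end = int(time.time())  # start_query takes epoch seconds
    query_id = logs.start_query(
        logGroupName=log_group,
        startTime=end - lookback_seconds,
        endTime=end,
        queryString=query,
    )["queryId"]

    deadline = time.monotonic() + timeout_seconds
    while True:
        response = logs.get_query_results(queryId=query_id)
        if response["status"] in TERMINAL:
            break
        if time.monotonic() > deadline:
            raise TimeoutError(f"query {query_id} still {response['status']}")
        time.sleep(poll_seconds)

    if response["status"] != "Complete":
        raise RuntimeError(f"query {query_id} ended as {response['status']}")
    # Each row is a list of {"field": ..., "value": ...} cells.
    rows = [{c["field"]: c["value"] for c in row} for row in response["results"]]
    if rows:
        sns.publish(TopicArn=topic_arn,
                    Subject="Scheduled log query results",
                    Message="\n".join(str(r) for r in rows))
    return len(rows)


def handler(event, context):
    import boto3  # available in the Lambda Python runtime
    return run_query_and_notify(
        boto3.client("logs"), boto3.client("sns"),
        log_group=os.environ["LOG_GROUP"],  # hypothetical variable names
        query=os.environ["QUERY"],
        topic_arn=os.environ["TOPIC_ARN"],
    )
```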

Users who need more advanced monitoring and alerting capabilities will need to integrate a tool like Coralogix. Such capabilities include filtering by log metadata, grouping by particular fields, and limiting triggering to specific times. They also include customizing alert messages and automating alerting with ML-assisted anomaly detection. The Coralogix integration with CloudWatch lets AWS customers aggregate all of their log data with data from other sources across hybrid and multi-cloud environments.

Exporting

Users may need to export logs from CloudWatch for archiving, sharing, or further analysis with advanced third-party tools. AWS provides several ways to get your log data to the right destination.

- Export logs from CloudWatch to S3

- Stream directly from CloudWatch to AWS Elasticsearch

- Stream directly from CloudWatch to AWS Kinesis

- Use Lambda functions to pass the data to a third-party solution like Coralogix

- Use Bash scripts with the AWS CLI to export up to 10,000 log events per request, specifying log streams and groups

- Use the open-source awslogs tool to download the data to your computer
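
The 10,000-per-request limit means an export has to page through results. The sketch below shows that loop with boto3's `filter_log_events`, the same operation the CLI exposes as `aws logs filter-log-events`. It follows `nextToken` until the token is absent, with times given as epoch milliseconds. The client is passed in, so it can be a boto3 client or a stub. Exporting to S3 goes through a separate operation (`create_export_task`), which is not shown.

```python
def export_log_events(logs, log_group, start_ms, end_ms, stream_names=None,
                      page_limit=10000):
    """Yield every event in a log group between two epoch-millisecond times.

    Each filter_log_events call returns at most `limit` events (10,000 max)
    plus a nextToken while more results remain; keep calling until the token
    disappears. `logs` is a boto3 CloudWatch Logs client or a stub.
    """
    kwargs = {
        "logGroupName": log_group,
        "startTime": start_ms,
        "endTime": end_ms,
        "limit": page_limit,
    }
    if stream_names:
        kwargs["logStreamNames"] = stream_names
    while True:
        page = logs.filter_log_events(**kwargs)
        yield from page.get("events", [])
        token = page.get("nextToken")
        if not token:
            break
        kwargs["nextToken"] = token
```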

Part 3 – Managing AWS Logs

Day-to-day logging operations take up most of the time spent in CloudWatch and CloudTrail, but you'll also need to make important decisions about how to manage your logs. These include securing access to log data, retaining it for future use, and keeping costs under control.

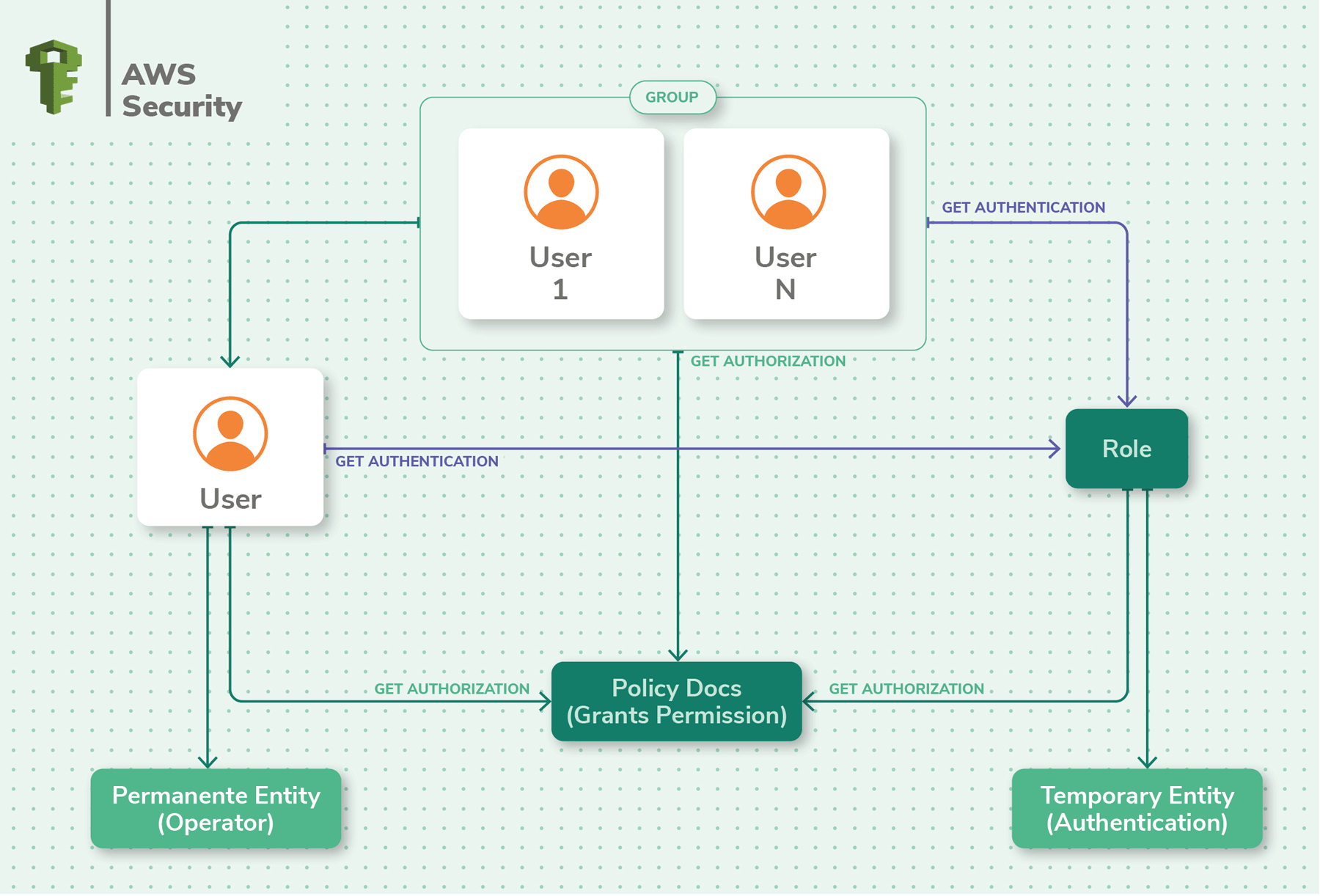

Security & Compliance

AWS has baked security best practices into its platform. For example, log data is encrypted at rest and in transit. The IAM service lets you control access to log data and grant granular access to users and other applications. CloudTrail is also great for recording all log-related activity for auditing and compliance purposes.

While AWS has done its part, under the 'shared responsibility' model you'll need to do your part in securing log data in AWS. For example, in AWS S3, you can enable "MFA delete" (multi-factor authentication delete) to protect log data against accidental deletion or sabotage. With CloudWatch Events, you can automatically detect when an instance is being shut down and offload its log data before the shutdown completes.

Log Retention

Log retention is critical for operational and compliance purposes. Sometimes a data breach is discovered years after it actually occurred. When historical data is necessary, you need your logs to have been retained, and AWS CloudWatch lets you retain log data for as long as you like.

When CloudWatch Log Groups are generated automatically (e.g., when a new Lambda function is created), their retention always defaults to "Never Expire". This setting needs attention.
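
Because auto-created log groups default to "Never Expire", a periodic sweep that applies a retention policy is a common safeguard. The sketch below assumes a boto3 CloudWatch Logs client, or any stub with the same `describe_log_groups` and `put_retention_policy` methods. The 30-day default and the optional name prefix are illustrative choices. The set of allowed retention values is copied from the API reference at the time of writing, so verify it before use.

```python
# Retention periods (days) accepted by PutRetentionPolicy at the time of
# writing; check the current API reference before relying on this list.
VALID_RETENTION_DAYS = {1, 3, 5, 7, 14, 30, 60, 90, 120, 150, 180, 365,
                        400, 545, 731, 1096, 1827, 2192, 2557, 2922, 3288, 3653}


def enforce_retention(logs, days=30, prefix=None):
    """Set a retention policy on every log group that has none.

    Groups left at "Never Expire" simply lack the retentionInDays key in
    describe_log_groups output. Returns the names of the groups changed.
    """
    if days not in VALID_RETENTION_DAYS:
        raise ValueError(f"unsupported retention period: {days}")
    kwargs = {"logGroupNamePrefix": prefix} if prefix else {}
    changed = []
    while True:
        page = logs.describe_log_groups(**kwargs)
        for group in page.get("logGroups", []):
            if "retentionInDays" not in group:
                logs.put_retention_policy(logGroupName=group["logGroupName"],
                                          retentionInDays=days)
                changed.append(group["logGroupName"])
        token = page.get("nextToken")
        if not token:
            return changed
        kwargs["nextToken"] = token
```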

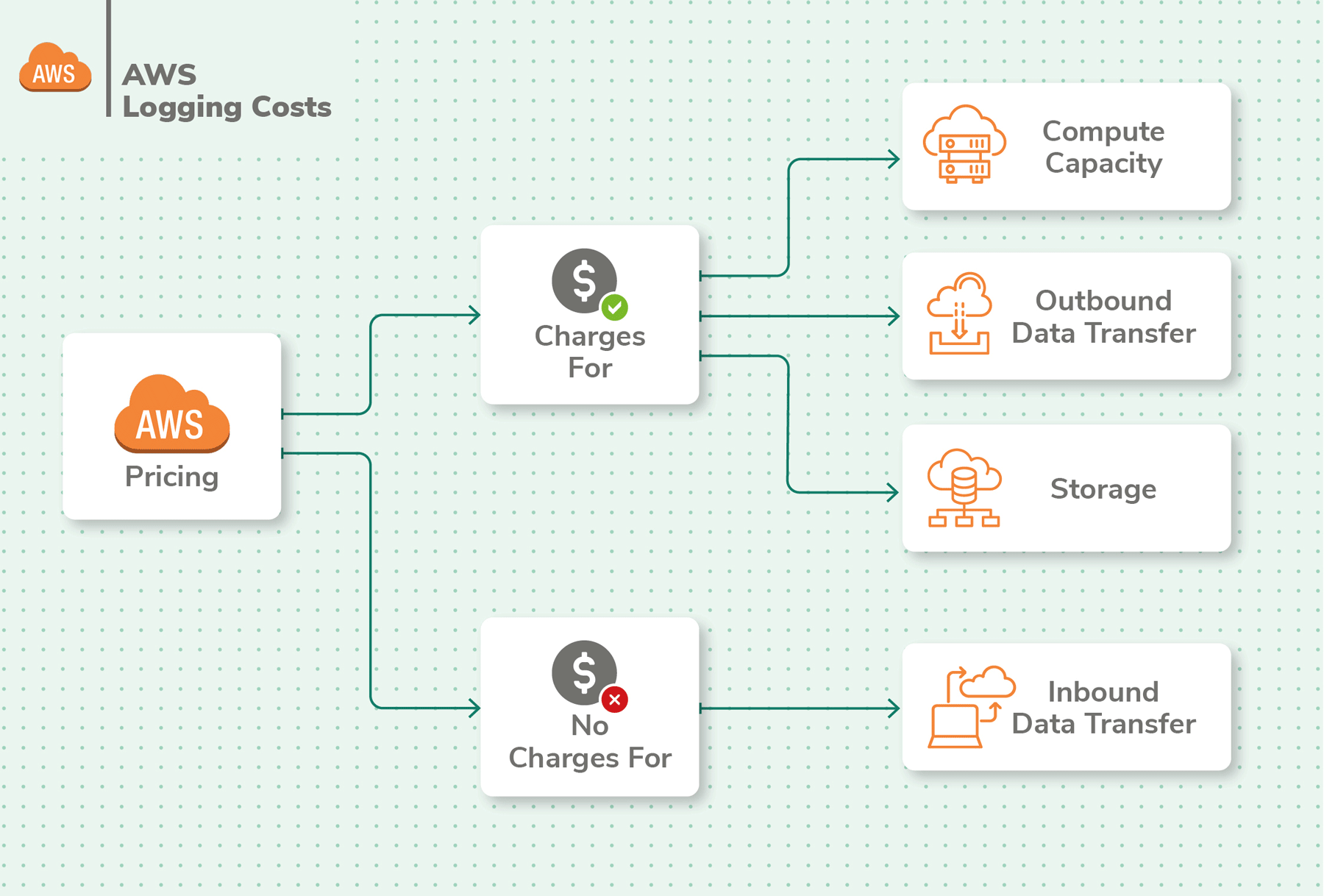

AWS Logging Costs

Cost is an important consideration for logging in the AWS cloud. AWS has a Free Tier with basic logging that includes 5GB of ingested log data and 5GB of archived data. Most applications' logging needs will scale beyond this tier. If left unchecked, costs can quickly shoot up and result in bill shock at the end of the month.

The cost of logging in AWS varies with the region you choose. To arrive at your final AWS CloudWatch cost, you also need to factor in metrics, logs, events, alerts, dashboards, retention, archiving, data transfer, and more. With so many options to choose from, AWS CloudWatch pricing can be complex.
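
To see how these factors add up, here is back-of-the-envelope arithmetic for the log portion of a CloudWatch bill. The per-GB prices are assumptions, roughly the US East list prices for ingestion, archived storage, and Logs Insights scanning; check the current pricing page for your region. The free-tier deductions follow the 5GB allowances for ingestion and archiving described above, and scanning is charged in full here. Metrics, alarms, dashboards, and data transfer are left out.

```python
def estimate_monthly_logs_cost(ingested_gb, stored_gb, scanned_gb=0.0,
                               ingest_price=0.50, storage_price=0.03,
                               scan_price=0.005, free_ingest_gb=5.0,
                               free_storage_gb=5.0):
    """Rough monthly CloudWatch Logs estimate in USD.

    All prices are assumed defaults, not authoritative figures. Free-tier
    allowances are subtracted from ingestion and storage before pricing;
    scanned data is charged in full in this sketch.
    """
    billable_ingest = max(0.0, ingested_gb - free_ingest_gb)
    billable_storage = max(0.0, stored_gb - free_storage_gb)
    return round(billable_ingest * ingest_price
                 + billable_storage * storage_price
                 + scanned_gb * scan_price, 2)
```

For example, suppose a month involves ingesting 100 GB, storing 300 GB, and scanning 200 GB. Under these assumed prices, that gives (95 × 0.50) + (295 × 0.03) + (200 × 0.005) = 47.50 + 8.85 + 1.00 = 57.35 USD.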

Costs also matter for metrics. The free tier includes only 10 detailed monitoring metrics, which limits your ability to do in-depth monitoring. It also includes only 3 dashboards and 10 alarms. Custom events cost extra too.

Logs published by AWS services are priced like custom logs. If you store a lot of logs in AWS, discounted vended log pricing with volume discounts is available. Currently, however, it only covers VPC and Route 53 logs.

To get better visibility into your CloudWatch costs, you can tag Log Groups through the API (this isn't currently possible in the console).

Part 4 – AWS Centralized Logging

AWS services generate logs regionally, so the best practice is to funnel all regional logs into one region and analyze the data across regions there. There are three options for centralizing your AWS logs:

- Use CloudWatch for centralized log collection, then push the logs to a log analysis solution via Lambda or Kinesis.

- Send all logs directly to S3 and process them further with Lambda functions.

- Configure agents such as Beats on EC2 instances and Functionbeat on Lambda functions to push logs to a logging solution.

Once the logs are gathered in one AWS region, they can be pushed to a more powerful logging solution like AWS Elasticsearch or an AWS partner service such as Coralogix.

Before embarking on a self-managed solution such as the ELK stack, consider the full costs and complexity of each approach. AWS Elasticsearch is easier to manage, but you'll still be responsible for maintaining and scaling it.

Basic to Advanced Logging

There are three major options for analyzing your AWS logs centrally – AWS CloudWatch, AWS Elasticsearch, and an AWS partner solution like Coralogix. Let's compare these options in the following table.

| Features | CloudWatch | AWS Elasticsearch | Coralogix |
|---|---|---|---|
| Service | Managed | Self-managed | Fully managed |
| Error Anomalies | No | No | ML, automated |
| Volume Anomalies | No | No | ML, automated |
| Instant Log Clustering | No | No | Yes |
| Dashboards | Basic | Self-managed Kibana | Managed Kibana; proprietary custom dashboards |
| Alerts | Basic, cost per metric | Basic | Advanced rule configuration options |
| Parsing | Automatic JSON field detection; parsing unstructured data is only possible while querying | Self-managed Logstash | Automatic JSON field detection; custom parsing rules |
| Querying | Results limited to a maximum of 10,000 logs; maximum of 4 concurrent CloudWatch queries; queries cost $0.005 per GB of data scanned (US East) | Unlimited, but can get slow if data is not well organized | Lightning-fast performance; unlimited log results; unlimited queries at no additional cost |
| Ingest | Maximum batch size: 1 MB; maximum event size: 256 KB | Multiple ingestion options: Kinesis Data Firehose, Logstash, CloudWatch Logs. Converting and mapping data can be difficult. | Simple data ingestion from AWS and non-AWS sources without limitations |
| Threat Detection | No | No | IP enrichment |
| Live Tail | No | No | Yes |
| Pricing | Usage-based; can start small but grow big very quickly. Pricing nuances make costs difficult to calculate. | Usage-based; can start small but grow big very quickly. Need to factor in the additional resource costs of setup and maintenance. | Simple tier-based pricing with clearly defined features and few restrictions |

Centralizing AWS Logs with Coralogix

Coralogix is a cloud-based log analytics tool available through the AWS Marketplace for convenient billing and tighter integration. It improves on AWS CloudWatch in many ways and is an advanced logging solution for AWS. Here are the key advantages of Coralogix over CloudWatch:

- Faster query speeds

- Alerts on abnormal behavior (using ML)

- Real-time log analysis (using Live Tail)

- Unlimited Dashboards

- Unlimited queries

- Unlimited API calls

- Team management and collaboration

- Better user experience

- Integrated threat detection

- White-glove user support

Shipping logs from AWS to Coralogix is a breeze, and there are two options. You can set up a Lambda function that transfers logs from a Kinesis stream to Coralogix. Alternatively, you can set up a Beats agent that listens for logs and ships them to Coralogix automatically. Once your logs are in Coralogix, you can use the platform's logging capabilities to glean insights from them, with dashboards, visualizations, and mature alerting.

Key Takeaways

Finally, here are the key takeaways to remember as you plan your logging strategy:

- Understand the AWS logging options – CloudWatch and CloudTrail – and how they relate to other AWS services like EC2, ECS, S3, Lambda, and more. A good understanding of the landscape will help you identify your AWS logging requirements and plan accordingly.

- All common logging operations, such as collecting, parsing, and querying logs, are possible with AWS CloudWatch, but you need to keep in mind the restrictions and quirks of each.

- As an IT admin, or as the person who manages the logs of your company's applications, you should care about three things. These are the security of log data, retaining as much log data as needed, and how much all of this costs.

- Centralizing AWS logs is important if you want to get maximum value from your AWS log data, and there are many approaches. You can use AWS CloudWatch to centralize logs, but you would sacrifice advanced features like advanced search and machine-learning anomaly detection. Also, with its complex pricing model, you'd have to think twice before enabling a new feature or archiving additional log data.

- A better way is to ship all your logs to a logging solution that avoids CloudWatch's drawbacks and delivers benefits that CloudWatch alone can't. Coralogix is one such solution, with advanced features based on machine learning. It is easy to set up, and analyzing your log data for insights is easier still. With its simple pricing model, you won't have to count dashboards or delete older logs to save costs.

AWS Logging Cheat Sheet

| AWS Service | What to log | Where | Delay | How |
|---|---|---|---|---|
| CloudFormation | EC2 application bootstrapping logs | CloudWatch | 5 seconds by default, can be changed | In the CloudFormation template: 1) create a CloudWatch Logs configuration file (cfn-logs.conf) on the instance; 2) download the awslogs package; 3) start the awslogs daemon. You can use a single CloudWatch Logs configuration file across multiple stacks. |
| CloudFront | Access requests | S3 | Up to 24 hours | Select the S3 bucket where your CloudFront logs will be saved and configure the ACL (access control list) permissions for the bucket. |
| CloudTrail | Any AWS API call; complete audit trails of all AWS account activity, such as security policy changes, new instances, console logins, etc. | S3 | 15 minutes (default) | CloudTrail is activated by default for all new accounts, with 90 days of event history. By default, logs are saved per region (except for global service events), so set up global logging to one bucket with the "Apply trail to all regions" setting. To deliver log data to S3, create trails in CloudTrail. Options include encryption and log file integrity validation. |
| CloudWatch Events | AWS API changes for various AWS services like EC2, Lambda, Kinesis streams, and more | CloudWatch | Real-time | Create a rule that triggers whenever something happens, when an API call is made, or on a set schedule. |
| CodeBuild | API calls | CloudTrail | 15 minutes | Via CloudTrail |
| CodePipeline | API calls | CloudTrail | 15 minutes | Via CloudTrail |
| Config | Resource configurations and changes; you can validate resource compliance against a set of rules | CloudTrail; SNS topic; S3 | 15 minutes | Use default "Managed Rules" or "Custom Rules"; set up via console or CLI; option to aggregate data from multiple regions and accounts; open-source rules |
| EC2 | Disk I/O, network I/O, CPU utilization | CloudWatch; export to S3 | 5 minutes (default); 1 minute with detailed monitoring | Install and configure the unified CloudWatch agent. Options for delivering to CloudWatch: 1) scheduled AWS CLI commands that deliver the logs to CloudWatch; 2) custom code that delivers via the CloudWatch SDK or API; 3) rsyslog (Linux) or NXLog (Windows) on EC2 to ship logs to third-party services like Coralogix; 4) the CloudWatch agent (recommended) or the EC2Config service running on the instance to push the logs. Agent setup: install the CloudWatch agent on EC2; grant EC2 permission to create and write to CloudWatch log groups and log streams; edit the agent's configuration file to define the file paths of the logs on the instance and the CloudWatch log group and stream to send them to. Optionally enable detailed monitoring. Stream to AWS Elasticsearch or to a third-party solution like Coralogix via Lambda. |
| ECS | General operating system logs; API actions with CloudTrail and ELK; Docker and Amazon ECS container agent logs; Docker daemon events | /var/log/ecs folder; CloudWatch | 5 seconds by default, can be changed | Use the awslogs log driver to send to CloudWatch: add a LogConfiguration property to each ContainerDefinition property in your ECS task definition. Create the log group and specify it within CloudWatch Logs, then specify an AWS region and a prefix to label the log stream. You can also enable ECS to auto-configure CloudWatch logs. Update the CloudWatch Logs agent configuration file and start the CloudWatch agent. Alternatively, use the json-file log driver; a Logspout service then retrieves the log events via the Docker Remote API and ships all logs to a central destination like Logstash. If using Fargate, awslogs is the only supported logDriver. |
| Elastic Load Balancer (ELB) | Access requests; changes in availability and latency | S3 | 5 minutes | In the AWS Console, enable access logs for ELB and select the S3 bucket where the logs should be saved. |
| Glacier | API calls | CloudTrail | 15 minutes | Via CloudTrail |
| GuardDuty | CloudTrail management events; API calls; request type, source, time, and more | CloudTrail | 15 minutes | Enable a CloudTrail trail to log events in an S3 bucket |
| IAM | API calls | CloudTrail | 15 minutes | Via CloudTrail |
| Inspector | API calls | CloudTrail | 15 minutes | Via CloudTrail |
| Kinesis Data Firehose | Error logs for streams | CloudWatch Logs | 5 seconds by default, can be changed | Enable error logging in the Kinesis Data Firehose console |
| Kinesis Data Streams | API calls for Kinesis data streams, logged as events | CloudTrail | 15 minutes | Via CloudTrail, stored in an S3 bucket |
| Lambda | Events; logs; Memory Used and Billed Duration at the end of each invocation (otherwise not tracked by CloudWatch metrics) | Lambda; CloudWatch | 5 seconds by default, can be changed | Logging statements in code (stdout) are pushed to a CloudWatch log group linked to the Lambda function. PutLogEvents is limited to 5 requests per second per log stream (and adds execution time). CloudWatch log groups are generated whenever you create a new Lambda function. Alternatively, stream the data directly to Amazon's hosted Elasticsearch via a CloudWatch Logs subscription. Avoid creating infinite invocation loops by using a CloudWatch subscription filter that ignores the logging function's own logs. Because AWS limits concurrent executions, take care not to reach the limit with too many logging functions. |
| Managed Streaming for Kafka – MSK | API calls | CloudTrail | 15 minutes | Via CloudTrail |
| RDS | RDS database log files | RDS console, CLI, or API; CloudWatch | Real-time | View and download DB instance logs from RDS; send logs to CloudWatch for analysis, storage, and more. |
| Redshift | Audit logs (connection logs, user logs, user activity logs); service-level logs in CloudTrail | S3; CloudTrail | A few hours | Enable logging from the Redshift console, API, or CLI |
| Route 53 | DNS query information like domain or subdomain, DNS record type, edge location, response, and date and time; API calls with CloudTrail | CloudWatch; CloudTrail | 5 seconds by default for DNS query logs, can be changed; 15 minutes for API calls | Configure Route 53 to send logs to an existing or new CloudWatch Logs log group. Create a CloudTrail trail that tracks API calls and stores them in an S3 bucket. |
| S3 | Bucket-level changes (logged by default); object-level changes (not logged by default); server access logs | S3 | 1 hour | Turn on log delivery for the source S3 bucket, and grant permissions to store logs in the target S3 bucket |
| SNS | Amazon SNS log file entries: message delivery status, SNS endpoint response, and message dwell time; successful and unsuccessful SMS message deliveries; metrics; API calls | Metrics in CloudWatch; API calls in CloudTrail | 5 minutes for metrics | Configure the SNS topic to send logs via Lambda to CloudWatch, using an IAM role that gives SNS write access to CloudWatch Logs. Create a trail in CloudTrail to keep data longer than the default 90 days. |
| SQS | API calls | CloudTrail | 15 minutes | Detailed monitoring (one-minute metrics) is currently unavailable for Amazon SQS. |
| Step Functions | Metrics and events using CloudWatch; API calls with CloudTrail | CloudWatch; CloudTrail | 5 seconds for metrics and events; 15 minutes for API calls | Configure Step Functions to send metrics and events to CloudWatch. Create a CloudTrail trail to track API calls to Step Functions. |
| STS | Successful requests to STS: who made the request and when | CloudTrail | 15 minutes | Via CloudTrail |
| Virtual Private Cloud (VPC) Logs | Incoming/outgoing traffic flow by IP for the network interfaces of your resources, e.g., rejected connections, many connections from the same IP, large data transfers, brute-force RDP/SSH attacks, blacklisted IPs; per VPC, VPC subnet, or Elastic Network Interface (ENI) | CloudWatch; S3 (each network interface receives a unique log stream) | 15 minutes | Set up per region. Set up an IAM role with permissions to publish logs to S3 or to the CloudWatch log group. Each ENI is processed in its own stream. Set up an S3 bucket if storing in S3, or a log group if storing in CloudWatch. Choose "Create flow log" per network interface or VPC, and send the logs either to CloudWatch or to S3. |
| WAF | All AWS WAF rules that get triggered, including the reason and the triggering request | Via Amazon Kinesis Data Firehose | Near-real-time (configurable via buffer size and buffer interval) | Create a delivery stream in Kinesis. Enable AWS WAF logging and select the Kinesis Data Firehose (in the same Region) that the logs should be delivered to. Optionally configure the Kinesis stream to send the data to an S3 bucket for archiving. Send to AWS Elasticsearch or Coralogix for analysis; Coralogix provides a predefined Lambda function that forwards your Kinesis stream directly to Coralogix. Consider redacting sensitive fields, e.g., cookies and auth headers, in Kinesis before shipping. |


Source: https://coralogix.com/blog/aws-centralized-logging-guide/